Yikun Han

I am an incoming PhD student in Information Sciences at the University of Illinois Urbana-Champaign. Previously, I received my Master's degree in Data Science from the University of Michigan. My research interests span the intersection of geometric deep learning, natural language processing, and AI for healthcare. I am fortunate to be advised by Prof. Halil Kilicoglu and Prof. Yue Guo at UIUC. During my time at Michigan, I was part of the CASI Lab, supervised by Prof. Ambuj Tewari, and closely collaborated with the AI Health Lab at the University of Texas at Austin.

Email / GitHub / Curriculum Vitae / Google Scholar / LinkedIn

Publications

Teaching Machine Olfaction in an Undergraduate Deep Learning Course: An Interdisciplinary Approach Based on Chemistry, Machine Learning, and Sensory Evaluation

Yikun Han, Michelle Krell Kydd, Joseph Ward, Ambuj Tewari

arXiv, 2025

code

We integrated machine olfaction into an undergraduate deep learning course, introducing smell as a new modality alongside traditional data types. Hands-on activities built around graph neural networks improved student engagement and comprehension. We also discuss challenges and directions for future improvement.

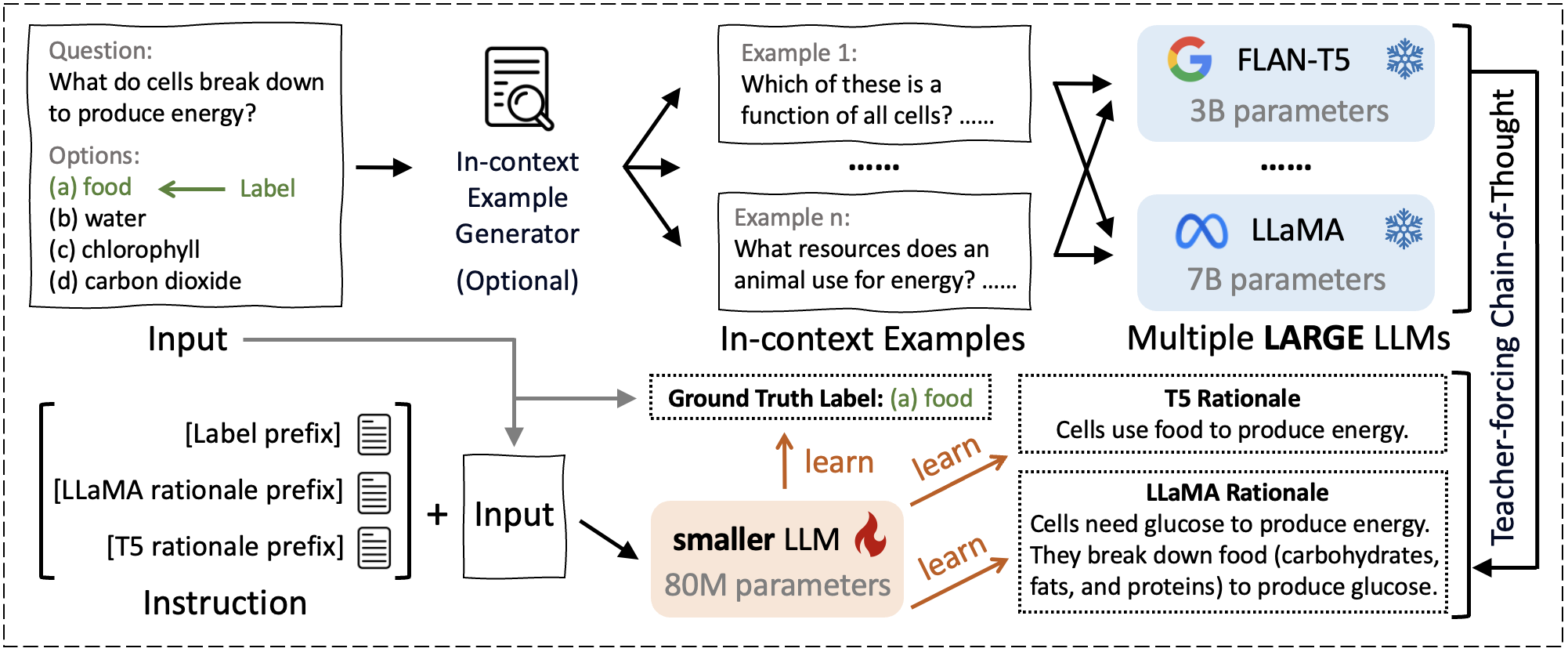

Beyond Answers: Transferring Reasoning Capabilities to Smaller LLMs Using Multi-Teacher Knowledge Distillation

Yijun Tian*, Yikun Han*, Xiusi Chen*, Wei Wang, Nitesh V. Chawla

International Conference on Web Search and Data Mining (WSDM), 2025

paper / arxiv / code

We present TinyLLM, a knowledge distillation approach that transfers reasoning abilities from multiple large language models (LLMs) to smaller ones. TinyLLM enables smaller models to generate both accurate answers and the rationales behind them, achieving superior performance despite a significantly reduced model size.

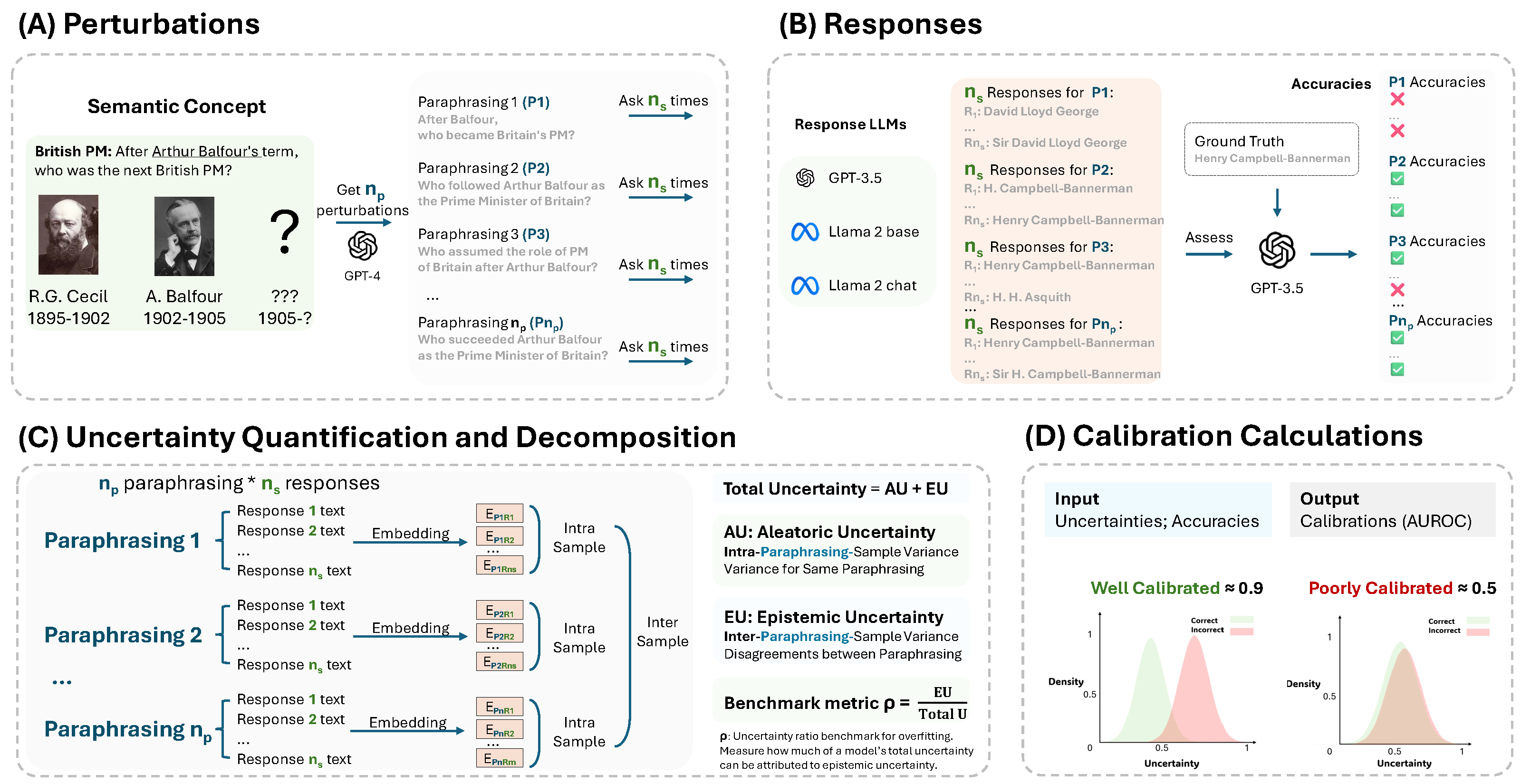

Mapping from Meaning: Addressing the Miscalibration of Prompt-Sensitive Language Models

Kyle Cox, Jiawei Xu, Yikun Han, Abby Xu, Tianhao Li, Chi-Yang Hsu, Tianlong Chen, Walter Gerych, Ying Ding

AAAI Conference on Artificial Intelligence (AAAI), 2025

paper / code

We explore prompt sensitivity in large language models (LLMs), where semantically identical prompts can yield vastly different outputs. By modeling this sensitivity as generalization error, we improve uncertainty calibration using paraphrased prompts. Additionally, we propose a new metric to quantify uncertainty caused by prompt variations, offering insights into how LLMs handle semantic continuity in natural language.

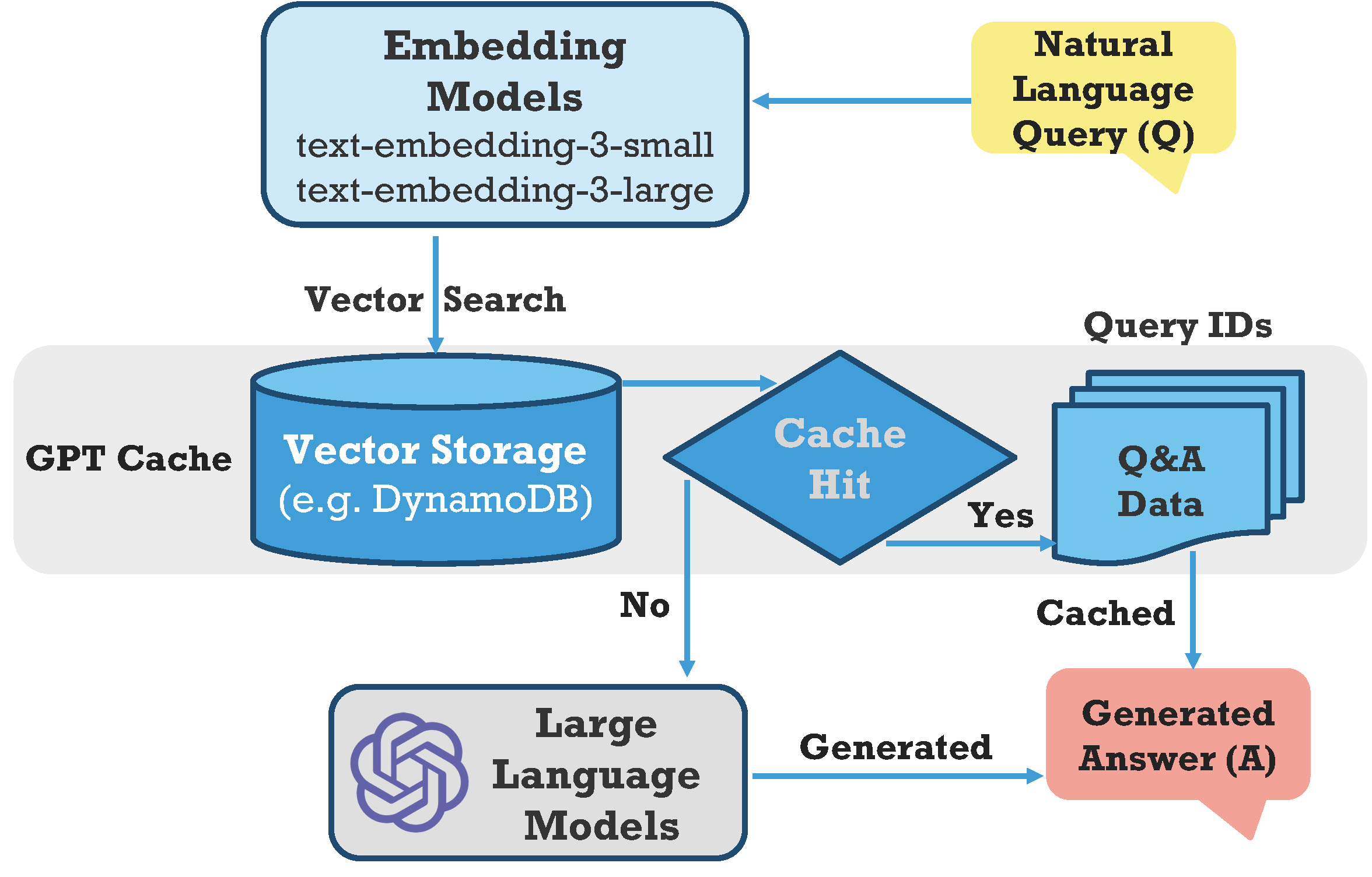

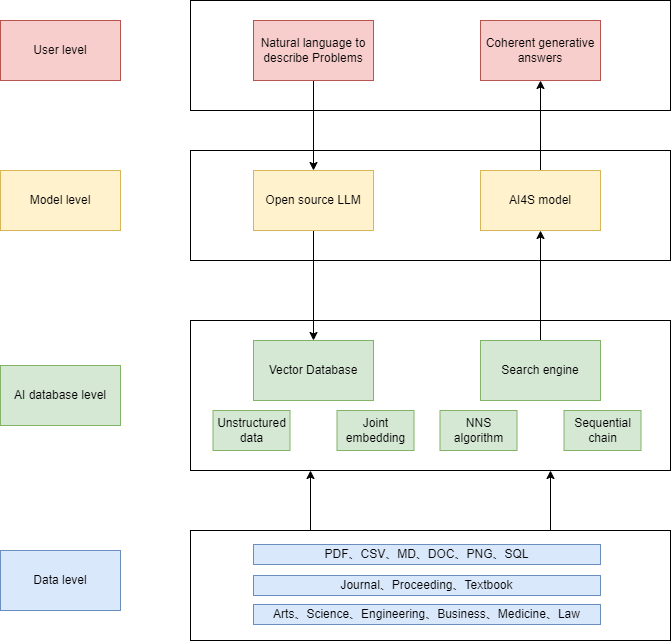

When Large Language Models Meet Vector Databases: A Survey

Zhi Jing*, Yongye Su*, Yikun Han*

Artificial Intelligence x Multimedia (AIxMM), 2025

paper / arxiv

We survey the integration of Large Language Models (LLMs) and Vector Databases (VecDBs), highlighting the role of VecDBs in addressing LLM challenges such as hallucinations, outdated knowledge, and memory inefficiencies. This review outlines foundational concepts, explores how VecDBs enhance LLM performance by efficiently managing vector data, and points to future directions in data handling and knowledge extraction.

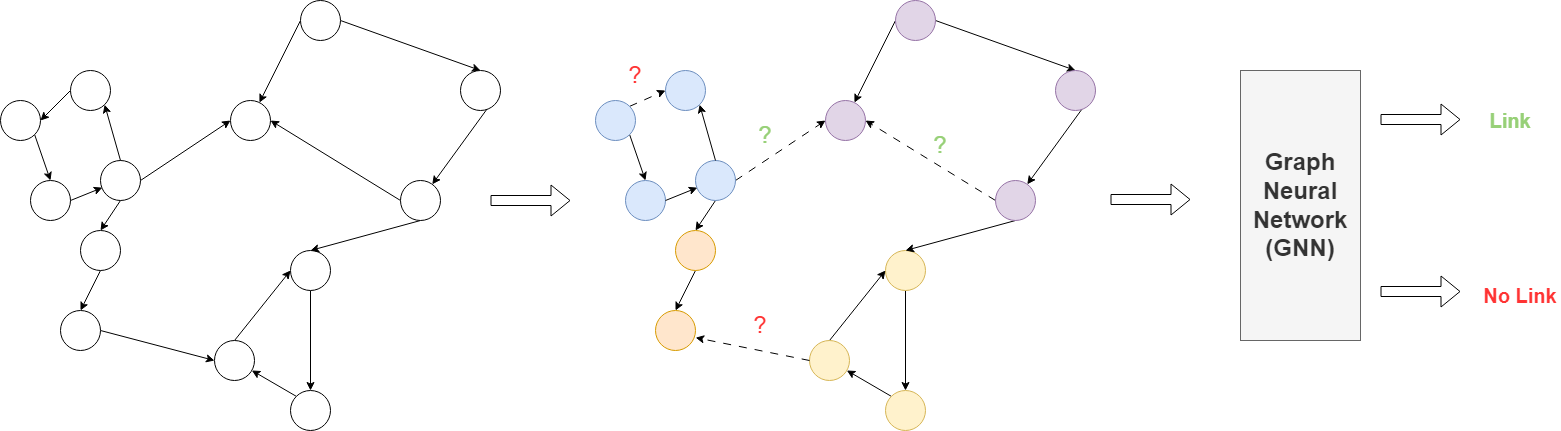

A Community Detection and Graph-Neural-Network-Based Link Prediction Approach for Scientific Literature

Chunjiang Liu*, Yikun Han*, Haiyun Xu, Shihan Yang, Kaidi Wang, Yongye Su

Mathematics, 2024

paper

We integrate the Louvain community detection algorithm with various GNN models to improve link prediction in scientific literature networks. This approach consistently boosts performance; for example, the AUC of GAT rises from 0.777 to 0.823, demonstrating the effectiveness of combining community structure with GNNs.

A Comprehensive Survey on Vector Database: Storage and Retrieval Technique, Challenge

Yikun Han, Chunjiang Liu, Pengfei Wang

arXiv, 2023

arxiv

We review key algorithms for approximate nearest neighbor search in vector databases, categorizing them into hash-based, tree-based, graph-based, and quantization-based methods. We also discuss open challenges and explore how vector databases can integrate with large language models to create new opportunities.

Competitions

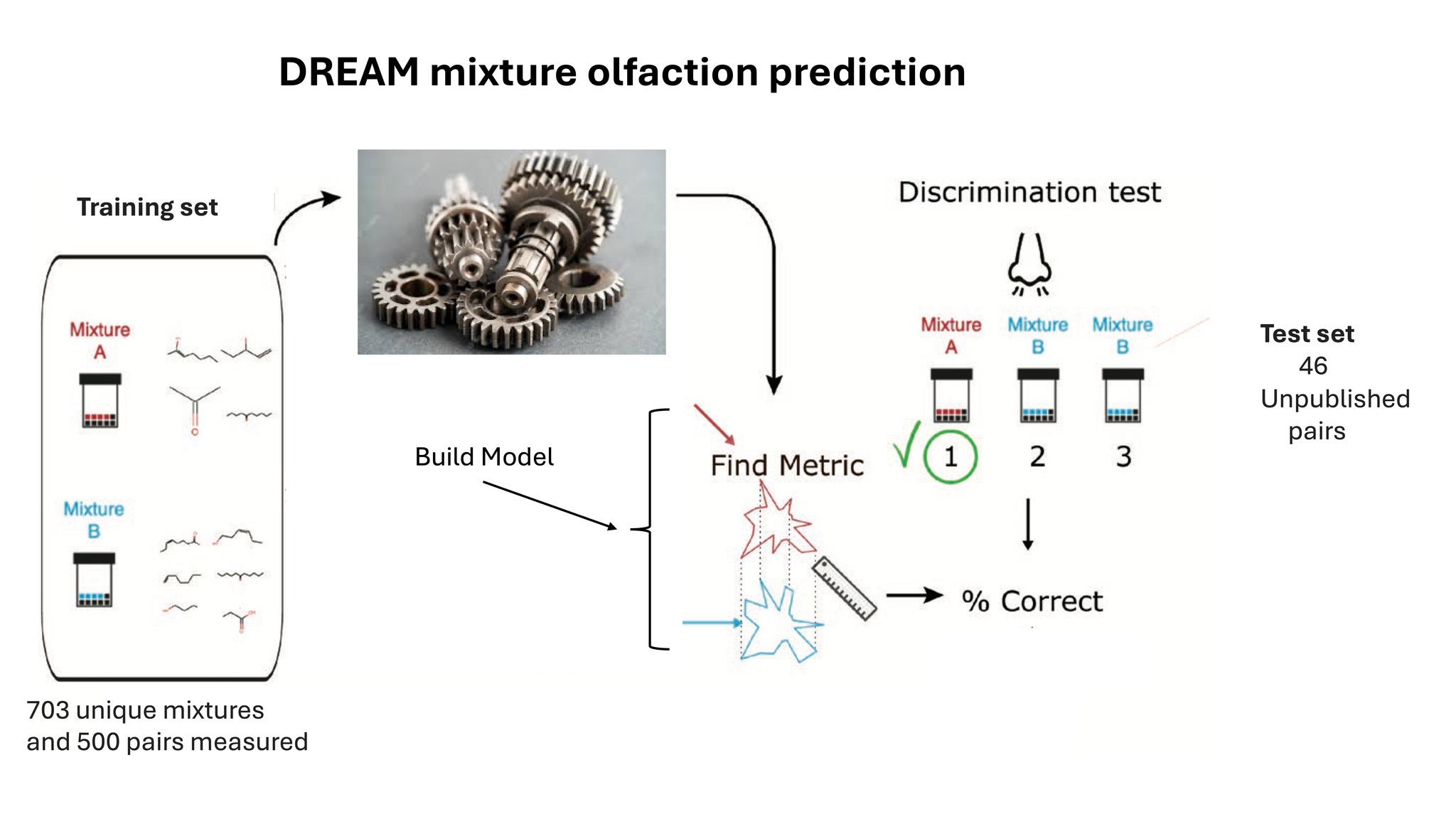

DREAM Olfactory Mixtures Prediction Challenge

Yikun Han, Zehua Wang, Stephen Yang, Ambuj Tewari

RECOMB/ISCB Conference on Regulatory & Systems Genomics with DREAM Challenges, 2024

writeup / code / website / news / slide

We use pre-trained graph neural networks and boosting techniques to enhance odor mixture discriminability, transforming single-molecule embeddings into mixture predictions with improved robustness and accuracy.

Research Internships

University of Michigan (Aug. 2023 - Present)

Advisor: Prof. Ambuj Tewari

Research Topics: [1] Graph Neural Networks [2] Molecular Property Prediction [3] Protein-Ligand Affinity Prediction

University of Texas at Austin (Feb. 2024 - Present)

Advisors: Prof. Ying Ding, Prof. Jiliang Tang

Research Topics: [1] Graph Retrieval-Augmented Generation [2] Medical AI [3] Collaborator Recommendation

University of Notre Dame (Dec. 2023 - Mar. 2024)

Advisor: Prof. Nitesh V. Chawla

Research Topics: [1] Knowledge Distillation [2] Multi-Teacher Collaboration [3] In-Context Learning

Tianyuan Mathematical Center in Southwest China (May 2022 - Nov. 2022)

Advisor: Prof. Gang Chen

Research Topics: [1] LAPACK Optimization [2] Parallel Computation for Large-Scale Matrices [3] High-Performance Matrix Factorization and Back Substitution

Education

University of Illinois Urbana-Champaign (Aug. 2025 - May 2030)

PhD in Information Sciences

University of Michigan (Aug. 2023 - May 2025)

Master's in Data Science, GPA: 3.965/4.0

Sichuan University (Sep. 2019 - Jun. 2023)

Bachelor's in Information Resources Management, GPA: 3.87/4.0, Rank: 2/76

Awards

RSGDREAM Travel Award, 2024

Outstanding Graduate, 2023

Second Prize Scholarship, 2022

Outstanding Student, 2021

Outstanding Student, 2020

Book List


| Title | Author | Date (MM-DD-YY) |

|---|---|---|

| Black Box | 伊藤詩織 | 07-02-25 |

| A Room of One's Own | Virginia Woolf | 06-23-25 |

| 雷雨 | 曹禺 | 06-18-25 |

| Terre des hommes | Antoine de Saint-Exupéry | 06-14-25 |

| 茶馆 | 老舍 | 06-04-25 |

| 悪意 | 東野圭吾 | 05-30-25 |

| 1587, a Year of No Significance | Ray Huang | 05-27-25 |

| The Little Mermaid | Hans Christian Andersen | 04-19-25 |

| Il Cavaliere Inesistente | Italo Calvino | 04-14-25 |

| Une Femme | Annie Ernaux | 03-29-25 |

| Soulstealers: The Chinese Sorcery Scare of 1768 | Philip Alden Kuhn | 03-29-25 |

| 黄金时代 | 王小波 | 03-23-25 |

| 秋园 | 杨本芬 | 02-28-25 |

| 我与地坛 | 史铁生 | 02-25-25 |

| The Rape of Nanking | Iris Chang | 02-20-25 |

| 在细雨中呐喊 | 余华 | 01-22-25 |

| The Worlds I See | Fei-Fei Li | 12-28-24 |

| 生死疲劳 | 莫言 | 11-09-24 |

| 攀岩技術教本 | 東秀磯 | 10-22-24 |

| 女ぎらい | 上野千鶴子 | 09-28-24 |

| Frankissstein: A Love Story | Jeanette Winterson | 09-07-24 |

| Deng Xiaoping and the Transformation of China | Ezra F. Vogel | 07-25-24 |

| 霸王别姬 | 李碧华 | 07-10-24 |

| Flowers for Algernon | Daniel Keyes | 07-10-24 |

| Murder on the Orient Express | Agatha Christie | 06-28-24 |

| 棋王·树王·孩子王 | 阿城 | 06-18-24 |

| Mere Christianity | Clive Staples Lewis | 06-15-24 |

| Aphorismen zur Lebensweisheit | Arthur Schopenhauer | 06-08-24 |

| Graph Kernels: State-of-the-Art and Future Challenges | Karsten Borgwardt | 05-19-24 |

| ラヴレター | いわい しゅんじ | 05-15-24 |

| 城南旧事 | 林海音 | 05-05-24 |

| L'Amica Geniale | Elena Ferrante | 04-29-24 |

| 历史深处的忧虑 | 林达 | 04-20-24 |

| 球状闪电 | 刘慈欣 | 04-16-24 |

| 西游记 | 吴承恩 | 04-09-24 |

| Il visconte dimezzato | Italo Calvino | 04-06-24 |

| 哭泣的骆驼 | 三毛 | 04-03-24 |

| The Lost Salt Gift of Blood | Alistair MacLeod | 03-27-24 |

| 置身事内 | 兰小欢 | 03-14-24 |

| 我们仨 | 杨绛 | 03-03-24 |

| Il barone rampante | Italo Calvino | 02-28-24 |

| 容疑者Xの献身 | 東野圭吾 | 02-14-24 |

| Συμπόσιον | Plato | 02-06-24 |

| Cien años de soledad | García Márquez | 02-05-24 |

| Crónica de una muerte anunciada | García Márquez | 01-08-24 |

| 台北人 | 白先勇 | 01-01-24 |

| Visualization Analysis & Design | Tamara Munzner | 11-27-23 |

| 沉默的大多数 | 王小波 | 11-24-23 |

| 上野先生、フェミニズムについてゼロから教えてください! | 上野千鶴子 | 11-20-23 |

| Pequeño vals vienés | Federico García Lorca | 11-15-23 |

| 太白金星有点烦 | 马伯庸 | 11-12-23 |

| Todesfuge | Paul Celan | 11-03-23 |

| Kongres Futurologiczny | Stanisław Lem | 10-28-23 |

| 许三观卖血记 | 余华 | 10-23-23 |

| The Conquest of Happiness | Bertrand Russell | 10-20-23 |

| 芙蓉镇 | 古华 | 10-15-23 |

| A Pale View of Hills | Kazuo Ishiguro | 10-09-23 |

| The Gods Themselves | Isaac Asimov | 10-05-23 |

| Divina Commedia | Dante Alighieri | 09-27-23 |

| The Protestant Ethic and the Spirit of Capitalism | Max Weber | 09-13-23 |

| La Poétique de l'Espace | Gaston Bachelard | 08-29-23 |

| Cosmos | Carl Sagan | 08-28-23 |

| 世界3:图像志文献库 | 巫鸿 | 08-15-23 |

| 山海经 | 佚名 | 08-05-23 |

| Ethnic America: A History | Thomas Sowell | 07-15-23 |

| The Craft of Research, Fourth Edition | Wayne C. Booth | 06-21-23 |

| Grokking Artificial Intelligence Algorithms | Rishal Hurbans | 06-21-23 |

| To Kill a Mockingbird | Harper Lee | 06-21-23 |

| Socrates' Defense | Plato | 06-13-23 |

| The Birth of Tragedy | Friedrich Wilhelm Nietzsche | 06-08-23 |

| 讲了很久很久的中国妖怪故事 | 张云 | 06-05-23 |

| D is for Digital | Brian W. Kernighan | 06-05-23 |

| Ego & Id | Sigismund Schlomo Freud | 05-28-23 |

| Morte accidentale di un anarchico | Dario Fo | 05-22-23 |

| 浪潮之巅(第四版) | 吴军 | 05-15-23 |

| 2001: A Space Odyssey | Arthur C. Clarke | 05-07-23 |

| The Art of Loving | Erich Fromm | 05-05-23 |

| 可能性的艺术 | 刘瑜 | 04-16-23 |

| 倾城之恋 | 张爱玲 | 04-11-23 |

| 中国妖怪故事(全集) | 张云 | 04-06-23 |

| The Hot Zone | Richard Preston Jr. | 03-30-23 |

| pandas数据处理与分析 | 耿远昊 | 03-28-23 |

| 往復書簡 限界から始まる | 上野千鶴子 | 03-20-23 |

| The Grasshopper: Games, Life, and Utopia | Bernard Suits | 03-19-23 |

| The Minority Report | Philip K. Dick | 03-12-23 |

| Le Deuxième Sexe | Simone de Beauvoir | 02-16-23 |

| Peasant Life in China | Xiaotong Fei | 02-12-23 |

| 命运 | 蔡崇达 | 01-20-23 |

| The Remains of the Day | Kazuo Ishiguro | 01-02-23 |

| Im Westen nichts Neues | Erich Maria Remarque | 11-28-22 |

| 聖母 | 秋吉理香子 | 10-28-22 |

| El jardín de senderos que se bifurcan | Jorge Luis Borges | 10-20-22 |

| 玩具修理者 | 小林泰三 | 10-10-22 |

| De la démocratie en Amérique | Alexis de Tocqueville | 09-21-22 |

| 너의 여름은 어떠니 | 김애란 | 08-23-22 |

| A Story of Ruins | Wu Hung | 07-29-22 |

| One Two Three... Infinity | George Gamow | 06-28-22 |

| C++ Primer Plus | Stephen Prata | 06-08-22 |

| The OpenMP Common Core: Making OpenMP Simple Again | Timothy G. Mattson | 06-04-22 |

| 東京貧困女子 | 中村篤彦 | 06-05-23 |

| Call Me by Your Name | André Aciman | 05-14-22 |

| The Hitchhiker's Guide to the Galaxy | Douglas Adams | 05-06-22 |

| The Unlikely Pilgrimage of Harold Fry | Rachel Joyce | 04-30-22 |

| 鲁迅杂文集 | 鲁迅 | 04-17-22 |

| サド侯爵夫人 | 三島由紀夫 | 03-31-22 |

| The Ph.D. Grind | Philip J. Guo | 03-28-22 |

| Information and Knowledge Organisation in Digital Humanities | Koraljka Golub | 03-27-22 |

| The Long Goodbye | Raymond Chandler | 03-24-22 |

| 西潮 | 蒋梦麟 | 03-08-22 |

| Being Mortal | Atul Gawande | 02-25-22 |

| IBICUS | Pascal Rabaté | 01-31-22 |

| Ensaio sobre a Cegueira | José Saramago | 01-27-22 |

| 崖山 | 吕玻 | 01-15-22 |

| 哲学家们都干了些什么 | 林欣浩 | 01-13-22 |

| Solaris | Stanisław Lem | 01-05-22 |

| Political Order and Political Decay | Francis Yoshihiro Fukuyama | 12-31-21 |

| El Retorno | Roberto Bolaño | 12-30-21 |

| Selected Poems of George Byron | George Gordon Byron | 12-22-21 |

| Alpha...directions | Jens Harder | 12-19-21 |

| The Origins of Political Order | Francis Yoshihiro Fukuyama | 12-18-21 |

| Auschwitz: A New History | Laurence Rees | 12-12-21 |

| To the Lighthouse | Virginia Woolf | 12-09-21 |

| The £1,000,000 Bank Note and Other Stories | Mark Twain | 12-01-21 |

| Записки из подполья | Фёдор Михайлович Достоевский | 11-19-21 |

| Le città invisibili | Italo Calvino | 11-12-21 |

| Flatland: A Romance of Many Dimensions | Edwin A. Abbott | 11-07-21 |

| The Murder of Roger Ackroyd | Agatha Christie | 11-04-21 |

| 乡土中国 | 费孝通 | 10-27-21 |

| 谈美 | 朱光潜 | 10-24-21 |

| The Nightingale & the Rose | Oscar Wilde | 10-23-21 |

| The Painted Veil | W. Somerset Maugham | 10-19-21 |

| Чернобыльская молитва | Svetlana Alexandrovna Alexievich | 10-18-21 |

| Lord of Light | Roger Zelazny | 10-14-21 |

| 箱庭図書館 | 乙一 | 10-04-21 |

| Poems New and Collected | Maria Wisława Anna Szymborska | 10-02-21 |

| 论语别裁 | 南怀瑾 | 09-30-21 |

| 论语译注 | 杨伯峻 | 09-30-21 |

| 生死场 | 萧红 | 09-30-21 |

| 了凡四训 | 袁了凡 | 09-27-21 |

| On Liberty | John Stuart Mill | 09-27-21 |

| Le Mythe de Sisyphe | Albert Camus | 09-24-21 |

| Смерть Ивана Ильича | Leo Tolstoy | 09-22-21 |

| The Royal Game | Stefan Zweig | 09-21-21 |

| The End of Eternity | Isaac Asimov | 09-14-21 |

| The Order of Time | Carlo Rovelli | 09-12-21 |


| 人间词话 | 王国维 | 09-08-21 |

| Lying on the Couch | Irvin Yalom | 09-06-21 |

| 中国历代政治得失 | 钱穆 | 08-26-21 |

| Sens | Marc-Antoine Mathieu | 08-15-21 |

| Also sprach Zarathustra | Friedrich Wilhelm Nietzsche | 08-14-21 |

| Klara and the Sun | Kazuo Ishiguro | 08-10-21 |

| 心に折り合いをつけて うまいことやる習慣 | 中村恒子 | 08-02-21 |

| Counselling for Toads: A Psychological Adventure | Robert de Board | 07-23-21 |

| 猫城记 | 老舍 | 07-23-21 |

| La Peste | Albert Camus | 07-15-21 |

| Kinder- und Hausmärchen | Jacob and Wilhelm Grimm | 07-08-21 |

| 桶川ストーカー殺人事件 | 清水潔 | 07-06-21 |

| Le Petit Prince | Antoine de Saint-Exupéry | 06-26-21 |

| OPUS | Satoshi Kon | 06-25-21 |

| K.O. à Tel Aviv | Asaf Hanuka | 06-24-21 |

| Tout Seul | Christophe Chabouté | 06-22-21 |

| Jolies Ténèbres | Marie Pommepuy | 06-19-21 |

| 3 Secondes | Marc-Antoine Mathieu | 06-18-21 |

| Demian: Die Geschichte von Emil Sinclairs Jugend | Hermann Hesse | 06-14-21 |

| Educated: A Memoir | Tara Westover | 06-05-21 |


Design and source code from Jon Barron's website